HR Pros' Top 10 Questions About AI, Answered

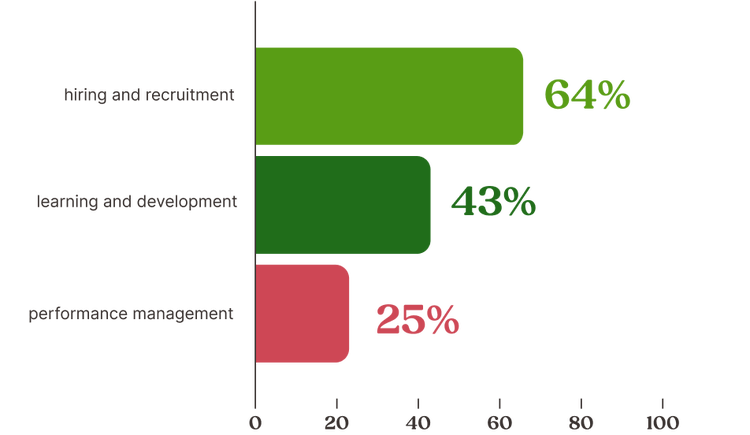

Most HR pros (75%) optimistically agree that as AI continues to advance over the next five years, human intelligence will become increasingly important in the workplace. But anxieties or confusion around the future of AI—and its immediate application—continue to hold many back from fully leveraging the benefits artificial intelligence can bring to the work of HR.

To address some of these concerns, we asked HR reps from around the world what they would ask an expert about AI and its relevance to their jobs. Here are 10 questions compiled from their responses, with answers from Alan Whitaker, Head of AI at BambooHR.

About the Expert

Prior to joining BambooHR as Head of AI in 2020, Alan founded several startups focused on AI, behavioral science, data visualization, and digital marketing. He also served as CTO of Affiliated Computer Services, a Fortune 500 company, and earned a bachelor’s degree in computer science from Brigham Young University.

Alan’s dedication to creating technology with soul strongly aligns with BambooHR’s ambition to provide HR software with heart.

10 FAQs About AI, Answered by Alan Whitaker

How can AI simplify or automate aspects of HR?

As for how AI can make your life easier today, it helps to think of the categories of AI as modes of transportation: a bike, a car, or a plane. We recently shared a one-pager that goes into more detail, but I’ll give a quick overview:

- You’ve got your bike—general AI software like ChatGPT, Midjourney, or Office copilots.

- Then a car—specialized AI software for various roles, such as HR, sales, or support, from different software vendors.

- And then a plane—custom AI solutions built in-house.

Jet fuel is expensive, and you need a pilot’s license, so the third category is going to be the rarest mode of transportation for most HR teams, but everyone in HR should have their driver’s license. You should learn enough about AI that you’re able to work with partners in choosing the right technology that uses AI in productive and relevant ways.

Bikes, though, are even more affordable and approachable. Everyone can ride one straight off the shelf, so to speak. Similarly, everyone can start experimenting with AI on simple, well-scoped tasks, like generating interview questions or job descriptions.

Just remember: “Copilot, not autopilot.” You want to get into the mindset of working with AI as your copilot or thought partner—you’re not just handing over the controls and going for a ride. You’re using it to brainstorm, research, and increase your productivity as you create content.

These are the things that general AI tools can help you do today, which is especially helpful for smaller, single-person HR teams that don’t have the resources a larger organization might have to buy or build specialized AI solutions.

Interpersonal mediation is a critical HR function. Given the complexity of human interactions, will AI ever truly make HR positions obsolete?

Here’s something I want to plant in the background of this conversation: AI is a multifaceted field that has been developing for decades. Generative AI, like ChatGPT and Midjourney, is the area that has leapt forward the most, and it’s the one most people have had personal experience with, but it’s only one facet. There are many ways we can use AI, which gets interesting when you talk about interpersonal mediation.

AI can be used to build a conversational interface, but we’re not at a point where it has a person’s emotional intelligence: the ability to understand context, nuance, and all the things that go into human emotional needs.

Gen AI and LLMs (large language models) specifically are trained to string words together, and they’re able to create surprisingly helpful combinations. They’re powerful, but they aren’t doing any real evaluation of emotion.

In fact, in March 2024, the European Parliament approved the Artificial Intelligence Act, which prohibits employers from using AI to interpret people’s emotions. More specifically, the Act forbids “emotion recognition in the workplace and schools” and “AI that manipulates human behavior or exploits people’s vulnerabilities.”

This will be an interesting ongoing conversation. I understand the dark side of what these types of regulations seek to prevent, and I can see how it could feel like Big Brother, something watching you and gauging your emotions. But I also wonder whether AI could help as an assistive technology for neurodivergent people when it comes to perceiving emotion, such as identifying when a person may be feeling upset.

It’s how you apply it that makes the difference, which makes this issue a perfect place for HR professionals in particular to lead.

Even if you’re working with an AI tool that handles some of the conversation and elicits some of the information in an interpersonal mediation, there will always be an opportunity for HR to be part of the strategic decision-making: interpreting the emotions and the situation, and then planning what comes after.

With employee feedback, for example, employees may express what they like or dislike, and that may have an emotional quality. But if we use AI to synthesize feedback themes around those likes and dislikes, would that violate European law? I don’t believe so, because it’s not explicit sentiment analysis. Employees provide that feedback to the company, and in using AI to categorize it, we’re not being surreptitious or trying to interpret employees’ emotional state.
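
To make that distinction concrete, here is a minimal sketch of theme categorization. It groups comments by topic without inferring anyone’s emotional state. The sketch assumes a simple keyword lookup, and the theme names and keywords are hypothetical. A real tool would more likely use an LLM or a trained classifier for the grouping step.

```python
from collections import Counter

# Hypothetical theme -> keyword mapping; replace with your own survey topics.
THEMES = {
    "compensation": {"pay", "salary", "bonus", "raise"},
    "workload": {"hours", "overtime", "deadline", "workload"},
    "management": {"manager", "feedback", "leadership"},
}


def tag_themes(comment: str) -> list[str]:
    """Return the topics a comment mentions; no sentiment or emotion is inferred."""
    cleaned = comment.lower().replace(",", " ").replace(".", " ")
    words = set(cleaned.split())
    return [theme for theme, keywords in THEMES.items() if words & keywords]


def summarize(comments: list[str]) -> Counter:
    """Count how many comments touch each theme."""
    counts: Counter = Counter()
    for comment in comments:
        counts.update(tag_themes(comment))
    return counts


feedback = [
    "I like my manager, but the overtime is too much.",
    "Salary is fine. More feedback from leadership would help.",
    "Every deadline feels unrealistic.",
]
print(summarize(feedback))
```

The result is a count of topics. Here, workload and management each come up twice. That is an aggregate theme summary, not a reading of any individual’s mood.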

Similarly, there are regulations around AI making material hiring and employment decisions, and for good reason. If a piece of technology were filtering candidates in a way that harmed one protected class, that would be bad. So human oversight remains imperative and irreplaceable.

The prospect of AI fully automating HR processes is concerning. Can we—or should we—prevent AI from completely taking over a human person doing an HR job?

AI won't take your job, but someone who uses it better might. There’s some healthy anxiety in that. Yes, this will change things, but HR is uniquely positioned to help organizations and individuals navigate this change.

Those who can apply AI wisely will get ahead, and those who don’t, well, they’ll fall behind. It’s one more way that companies and workers can increase productivity, and it adds competitive pressure.

Can AI provide anything a human can't? Am I essentially working myself out of a job by using it?

Like all tools and technologies, AI changes what's possible and how we do things. At its best, technology makes it easier to accomplish more with the same amount of energy, or less energy. Obviously, you can do more with a forklift than without.

So ideally, we’re using technology to take care of the more mundane parts of our lives, which creates opportunities for us to make the contributions that humans—and even each individual—are uniquely positioned to make.

The goal of technology is to get us to a place of peak contribution, using appropriate automation. Again, we want a copilot, not an autopilot. It’s the combination of human and machine that makes the machine powerful, and vice versa; neither is as powerful without the other. Each has different strengths and weaknesses, but together, those weaknesses can be compensated for while the strengths are amplified. To me, that means the human piece that only HR can provide will become more important.

Is AI ethical?

This is kind of like asking, “Is the internet ethical?” It depends on how it’s used. There are great ways to use AI, and there are plenty of ways it can be used unethically. We can ask ourselves, “Has this particular model been trained ethically? Did it source information in a way that was fair and gave credit to authors?” These are the things the legal system is grappling with right now.

So there’s the ethics of how it’s trained, and then there’s the potential for it to reflect biases from the source material. It would be unethical to rely wholly on AI output without human evaluation. There are plenty of ways you could fall into the trap of using it unethically, but AI itself isn’t inherently ethical or unethical. It’s our application of it that can be classified as one or the other.

How do you handle employee privacy and data security when it comes to AI?

With this question, we can talk about making sure you opt out of having your data used to train models. For some vendors, that’s the default, but other times, you may need to explicitly opt out and say, “Hey, don’t use my data for training.” Just being aware of that is an important part of information privacy and security.

Another thing to consider is whether you trust the organization that has agreed not to use your data. If someone were able to demonstrate that private data had been leaked into OpenAI, for example, that could be catastrophic for them. Companies that are building LLMs should be very careful and attuned to those needs, but each organization has to decide whom it trusts.

You can have data protection agreements and other safeguards with the vendors behind these models that guarantee that privacy legally, but do you trust the vendor? What’s their reputation? Is the data pathway kept safe?

These questions aren’t that different from existing privacy and security concerns around HR data and HR Information Systems (HRIS). You don’t want a fly-by-night place storing your sensitive HR data, and it’s the same thing when you’re passing data to these models. Is it secure? And you can address that on a case-by-case basis as you’re looking at AI vendors.

There’s a quote I love from Jay Alammar at the enterprise AI platform Cohere. He says not to think of these large language models as standalone minds. Think of them as a component of an intelligent system.

AI is just one component, and you’ve got all these places where the data needs to flow in order to deliver value. We need to make sure we have that data protection in place just as we would with the cloud or any outsourced database service.
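
Here is one illustration of a safeguard along that data pathway. This minimal sketch masks obvious identifiers before employee text leaves your systems for an external model. The patterns are hypothetical assumptions, including the `EMP-` employee ID format. Regex masking only catches simple cases and is no substitute for a vendor data protection agreement.

```python
import re

# Hypothetical patterns; a real deployment would tune these to its own data.
PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "PHONE": re.compile(r"\+?\d[\d\s().-]{7,}\d"),
    "EMPLOYEE_ID": re.compile(r"\bEMP-\d{4,}\b"),  # assumed internal ID format
}


def redact(text: str) -> str:
    """Replace each match with a labeled placeholder before the text is sent anywhere."""
    for label, pattern in PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


note = "Jordan (EMP-20417, jordan@example.com, 555-123-4567) asked about leave."
print(redact(note))
```

These patterns don’t catch names: "Jordan" survives in the output. That gap is one reason the vendor questions above still matter.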

How does AI “learn”? Can it fact-check itself? How much should we trust AI output?

We could do multiple postgraduate courses on if and how AI “learns.” But when we’re talking about generative AI and large language models, they learn, or are trained, by taking language from available (and hopefully ethical) public sources, like a snapshot of the internet from a certain point in time. From that text, the LLM is trained mathematically to predict the next word in a sequence.
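
To illustrate what “predict the next word” means, here is a deliberately tiny sketch. It is a bigram model: it counts which word follows which in a training text, then predicts the most frequent follower. Real LLMs differ in important ways. They use neural networks, train on vastly more data, and work on tokens rather than whole words. Treat this only as an analogy for the training objective.

```python
from collections import Counter, defaultdict
from typing import Optional


def train_bigrams(text: str) -> dict[str, Counter]:
    """Count, for every word, which words follow it in the training text."""
    words = text.lower().split()
    followers: dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        followers[current][nxt] += 1
    return followers


def predict_next(model: dict[str, Counter], word: str) -> Optional[str]:
    """Return the word seen most often after `word`, or None if `word` never appeared."""
    counts = model.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]


corpus = "the new hire met the team . the team met the manager . the team likes the plan"
model = train_bigrams(corpus)
print(predict_next(model, "the"))  # prints: team ("team" follows "the" three times)
```

These counts only measure whether a continuation is frequent. They never check whether it is true.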

Then there’s more traditional machine learning, which includes supervised and unsupervised learning. Unsupervised learning is about finding latent patterns in data and exposing them. With supervised learning, you have a bunch of data where each row is an example. Each example has different fields and attributes, plus a classification or outcome that you designate as the truth set. You provide examples with certain attributes and their corresponding classifications, and you train the model on those examples.
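
The supervised setup can be sketched with one of the simplest learners, a 1-nearest-neighbor classifier. Each training row pairs attributes with a labeled outcome, and those labels form the truth set. For this learner, “training” amounts to storing the labeled rows. The attributes, labels, and data are made up for illustration and are not a recommended model for any HR decision.

```python
import math

# Hypothetical labeled rows: (course length in hours, number of prerequisites) -> level.
# The labels are the truth set the classifier learns from.
TRAINING_ROWS = [
    ((2.0, 0), "intro"),
    ((3.0, 0), "intro"),
    ((4.0, 1), "intro"),
    ((12.0, 3), "advanced"),
    ((16.0, 2), "advanced"),
    ((20.0, 4), "advanced"),
]


def predict(attributes: tuple[float, int]) -> str:
    """1-nearest-neighbor: copy the label of the closest training example."""
    _, label = min(TRAINING_ROWS, key=lambda row: math.dist(row[0], attributes))
    return label


print(predict((5.0, 1)))
print(predict((14.0, 3)))
```

Adding more labeled rows changes the predictions, which is the sense in which a supervised model is “trained to” its examples.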

Can AI fact-check itself? Yes and no. Gen AI and LLMs are not factually grounded by default.

“Linguistically feasible wanderings” is what they do. The way they string those words together tends to converge most often onto a right or good answer. Gen AI doesn’t have traditional reasoning or inherent accuracy, but again, if it's just a component of an intelligent system, you could have part of that system do some fact-checking.

As an example, that fact-checking piece can be human ratings of outputs—thumbs up, the answer was helpful or correct, or thumbs down, it wasn’t, and that human input is the authority. There could be automated metrics that flag things for human review.
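
Here is a minimal sketch of that loop. It assumes a hypothetical automated check that scores each answer between 0 and 1. Low-scoring answers are flagged for a person. When a human rating exists, it always wins over the automated score. The threshold and field names are illustrative assumptions, not settings from any particular product.

```python
from dataclasses import dataclass
from typing import Optional

REVIEW_THRESHOLD = 0.7  # assumed cutoff; tune it against answers people have already rated


@dataclass
class Answer:
    text: str
    auto_score: float                    # from a hypothetical automated metric, 0.0 to 1.0
    human_rating: Optional[bool] = None  # True = thumbs up, False = thumbs down


def status(answer: Answer) -> str:
    """Human ratings are the authority; the metric only decides what gets flagged."""
    if answer.human_rating is not None:
        return "approved" if answer.human_rating else "rejected"
    if answer.auto_score < REVIEW_THRESHOLD:
        return "needs human review"
    return "passed automated check"


answers = [
    Answer("PTO accrues monthly.", auto_score=0.9),
    Answer("Benefits start on day 90.", auto_score=0.4),
    Answer("Open enrollment is in May.", auto_score=0.95, human_rating=False),
]
for answer in answers:
    print(f"{status(answer)}: {answer.text}")
```

The third answer shows the design choice: a high automated score does not override a person’s thumbs down.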

We want to keep the human in the loop. Again, this is the collaboration of people and machines. It’s important for us to evaluate the quality or the factuality of what’s being produced. That goes back to the “copilot, not autopilot” idea.

What are the most impactful ways small HR teams can use AI?

The one-pager I mentioned earlier is still a great place to start for this question, but I also want to point out the leadership role HR can play in protecting their employees and company from AI fraud.

Earlier this year, a finance worker in Hong Kong was duped out of 200 million Hong Kong dollars (25.6 million USD) after being lured into a Zoom meeting with deepfaked colleagues and executives. This was an advanced heist and an extreme example, but it shows you what’s possible when someone is determined enough. You can’t take things at face value like you used to, and AI literacy is becoming increasingly important.

By being trained on AI and the things to look out for, and by partnering with the security function of the company, HR can leverage their interpersonal expertise and internal comms processes to make sure everyone at the company is AI-literate and protected.

We’re entering a period of zero-trust information. In the generative space especially, we need to habitually ask ourselves, “Where did this information or image or video come from? What were the credentials? What were the processes?” This is an area where HR can take the lead and have a hugely important impact.

What tasks is AI best suited for? What kinds of tasks aren't a good match for AI?

Making unilateral employment decisions is obviously a bad use case for AI. There may be contributions you could get from AI in the copilot sense, but you want to be careful of relying on it too much without human oversight. And that’s not just because AI can lead you astray—there are legal and compliance issues to consider as well.

Take New York City’s hiring law (Local Law 144), for example. It requires employers that use automated employment decision tools to have them independently audited for bias against protected categories. There are a lot of very impactful decisions made in an HR context, and it’s up to HR to assess and justify any decision that’s made with AI input.
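
Bias audits of this kind typically start from selection rates. The sketch below computes two numbers for each category. The selection rate is candidates selected divided by candidates assessed. The impact ratio is that rate divided by the highest category’s rate, which matches how New York City’s bias-audit rules define it. The 0.8 flag borrows the EEOC “four-fifths” rule of thumb; Local Law 144 itself sets no such threshold. The category names and counts are invented for illustration.

```python
# Hypothetical audit data: category -> (candidates selected, candidates assessed).
OUTCOMES = {
    "Group A": (48, 120),
    "Group B": (30, 100),
    "Group C": (18, 90),
}

FOUR_FIFTHS = 0.8  # EEOC rule of thumb for flagging possible adverse impact


def impact_ratios(outcomes: dict[str, tuple[int, int]]) -> dict[str, float]:
    """Selection rate of each category divided by the highest category's selection rate."""
    rates = {group: selected / assessed for group, (selected, assessed) in outcomes.items()}
    top_rate = max(rates.values())
    return {group: rate / top_rate for group, rate in rates.items()}


for group, ratio in impact_ratios(OUTCOMES).items():
    flag = "review" if ratio < FOUR_FIFTHS else "ok"
    print(f"{group}: impact ratio {ratio:.2f} ({flag})")
```

A ratio below the flag is a prompt for human investigation, not an automatic finding of bias.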

At a recent SHRM conference, SHRM CEO Johnny C. Taylor, Jr., talked about how, yes, there can be bias in AI, but there’s bias in humans too. Maybe together we can cover those gaps for each other.

With “headless resumes,” for example, we can avoid our own inherent biases while helping AI do the same. Yes, we shouldn’t trust AI without question, but don’t implicitly trust yourself either. A habit of checks and balances applies both ways.
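
Here is a minimal sketch of the idea. It assumes “headless” means the identifying header information is removed, and that resumes have already been parsed into fields. The identifying fields are dropped before either a reviewer or an AI screening step sees the record. The field names are hypothetical. Which fields to withhold is a policy decision for your team, not something this code settles.

```python
# Hypothetical field names for a parsed resume; adjust to what your applicant tracking system exports.
IDENTIFYING_FIELDS = {"name", "email", "phone", "address", "photo_url", "graduation_year"}


def headless(resume: dict) -> dict:
    """Return a copy of the resume without fields that could reveal identity or protected traits."""
    return {field: value for field, value in resume.items() if field not in IDENTIFYING_FIELDS}


candidate = {
    "name": "Sam Rivera",
    "email": "sam@example.com",
    "graduation_year": 2009,
    "skills": ["payroll", "HRIS administration"],
    "experience_years": 7,
}
print(headless(candidate))
```

The original record is left untouched, so the identifying details remain available once a human decides to move forward.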

How can HR professionals influence the direction AI is taking in this field?

HR is uniquely positioned to help organizations and individuals navigate this new technology. To do that, one thing HR professionals can do today is learn about it and experiment with it individually. From there, they can talk with their organization about how to create safe environments for people to learn and experiment. Together, they can decide where AI could be applied or piloted, and where it shouldn’t be.

That could look like a hackathon-style event or some sort of learning event where people take a problem and they go experiment and use the tools. That's one way to spur learning and experimentation.

Another part of HR’s involvement will be in setting and administering AI policy. There’s a lot HR can take the lead on here.

A Few Final Thoughts About Emerging Technology

New technology comes in waves, and public reaction always runs the gamut—excitement, concern, anger.

People were up in arms when the Jacquard loom came out in the early 1800s. It was taking away textile jobs, but it also democratized access to more affordable fabric, and with that surge, there were more jobs created in the end, even though those jobs looked different than before the new technology.

Then you’ve got, of course, the computer revolution, which, interestingly, also links back to the Jacquard loom through its punched cards. You’ve got the internet and cloud computing. (And of course, part of the internet’s legacy includes large language models.)

But AI feels bigger than any of the other waves I’ve been through. Maybe it’s just because I’m in the middle of it, but I recently attended SHRM’s AI+HR Project conference, and something one of the speakers shared really resonated with me.

Cassie Kozyrkov, the CEO of Data Scientific and former Chief Decision Scientist at Google, talked about how we’ve gotten used to what could not be automated, but many of those things are now on the table.

That’s the revolution going on here. All these things that software previously couldn't automate—many of them can now be accessed and run through systems that use generative AI.

We can’t always predict the ripple effects of new technology. Take AOL Instant Messenger (AIM) and the template it set for direct messaging features in all the social media and comms apps that came after it. And that started out as a skunkworks, under-the-radar project that almost got one of the developers fired.

What’s new is always met with resistance because it’s hard to predict the impact and because it’s easy to assume the worst. But great technology that makes our lives better has a way of sticking. I encourage you to continue educating yourself and your teams about AI, and staying as close to the forefront as possible as this latest technological revolution continues to take shape.
